A proposal for solving social media censorship

We need a law that makes social media giants like Facebook liable for the posts they push at us, but not for the ones from the people we follow.

Joe Rogan and his guests said things on his podcast. Some of those things could inspire listeners to fear the covid vaccine more than covid itself, potentially causing needless deaths.

(Note: this full issue is free to all subscribers. Enjoy!)

It matters because Rogan is a big deal. He’s the world’s biggest podcaster. The Joe Rogan Experience, exclusive to Spotify, draws audiences that dwarf those of any political commentator on TV.

Rogan’s podcasting shtick is that he’s an incorrigible stoner having endless late-night conversations about things he doesn’t understand. With a good guest, it’s a relatable journey of discovery that brings his audience along. With a bad guest, it’s a source of disinformation that could persuade Rogan’s fans.

So a movement started on social media to boycott Spotify. Famous singers, including Neil Young, demanded that Spotify remove their music in protest, and Spotify complied. Thousands of people canceled their Spotify accounts, and Spotify lost $2 billion in market value.

Rogan supporters shouted: CENSORSHIP!!

Except nothing about this story has anything to do with censorship. Rogan is still America’s most influential voice. And even if he left Spotify, it would be easy for him to host his podcast elsewhere. Joe Rogan is uncensorable and uncancelable, thanks to both the US Constitution and the podcast medium, which has no gatekeepers.

But it does add to the perennial conversation about the role of technology companies in governing speech.

In truth, Spotify isn’t really central to that conversation. But Facebook, Twitter and other social networks are.

Are social networks more like telephone companies… or publishers?

Our laws governing all this are obsolete. In the past, we categorized all communications media into one of two slots: Either they were a “common carrier,” like the telephone company, or they were a “publisher,” like a newspaper.

AT&T couldn’t be sued for slander or hate speech if two people committed those acts during a phone call. But the New York Times could be sued for libel or hate speech if an opinion columnist published the same words.

So which category do Facebook and Twitter fit into?

Social sites let everyone talk to everyone, like a telephone network. But the companies themselves also choose which content to publish or not publish. Social sites routinely censor child sexual abuse material, or CSAM (the crime formerly known as child pornography), racist hate speech, terrorist recruitment content, incitement to violence and, most recently, vaccine-related misinformation.

They also have algorithmically filtered feeds. Facebook, for example, shows you only around 10% of the posts from the people and companies you follow. Plus, it adds content to your feed from people and companies you never followed. Facebook is heavy-handedly deciding what you see on Facebook.

Twitter’s default view doesn’t filter, but it does prioritize, deciding what comes first. In practice, that reshuffling means most users will never see the posts at the bottom, even though they’re theoretically there.

Liberals complain that social sites amplify hate speech and calls to violence by right-wing radicals. Some advocate and participate in social media shaming to “cancel” offenders.

Conservatives complain that social sites are controlled by extreme leftists who censor conservative speech. Some call for laws to prevent social media companies from engaging in this “censorship.”

Both “cancel culture” and government intervention are bad solutions. What we really need is to update our laws around speech liability and bring the old common-carrier/publisher issue into the 21st century.

Why free speech should be our priority

There’s confusion (sometimes deliberate) about whether Twitter banning the account of some politician is a violation of the First Amendment. (It isn’t; the Amendment bars Congress, not Twitter, from abridging freedom of speech.)

We’re so polarized now, and so many people are drunk on clobbering the “other side,” that free speech is in peril. We need not only to reaffirm the constitutional protection of free speech, but also to advocate for free speech in the “public square,” i.e., social media.

It helps to remember that objectionable speech is the only kind that needs protecting.

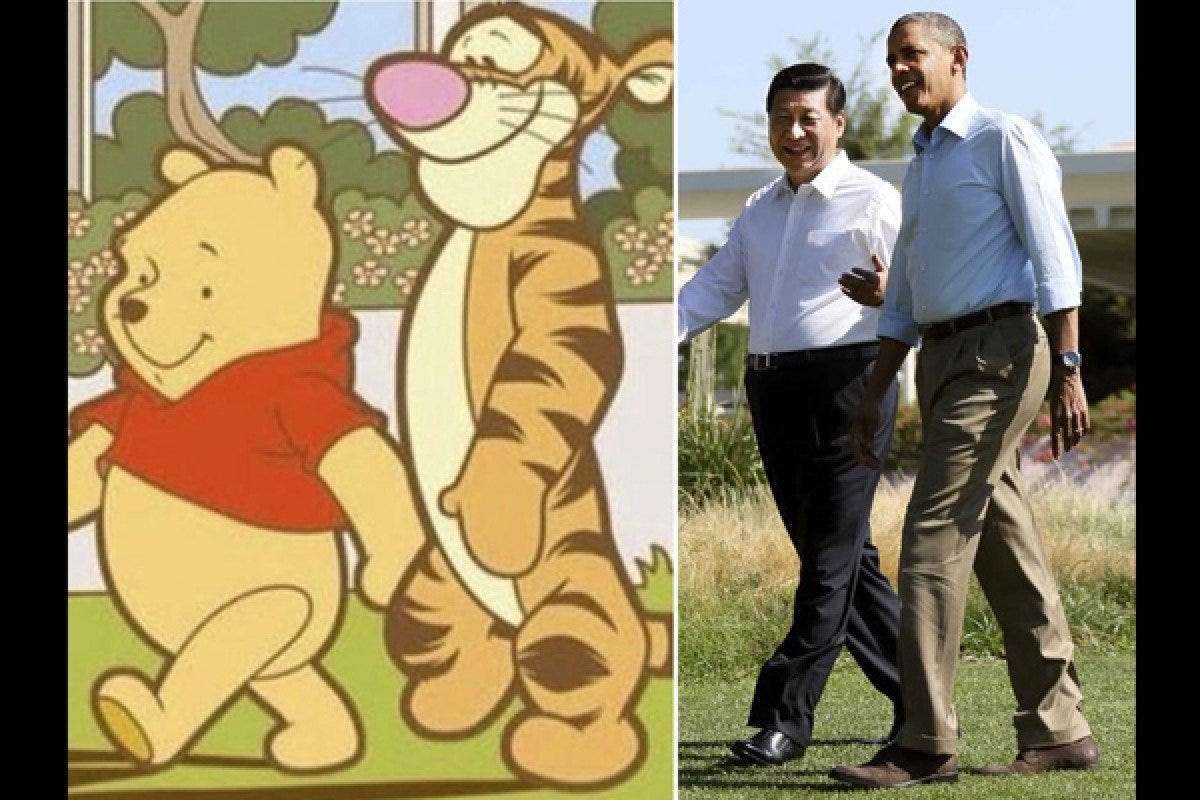

Most meaningful speech is objectionable to someone. And even meaningless speech can offend. In China, mentioning Winnie the Pooh is banned on social media because of a meme that circulated years ago suggesting that President Barack Obama looks like Tigger and President Xi Jinping looks like Pooh.

Sometimes objectionable speech is: “The Earth is flat.” But other times objectionable speech is: “The Earth revolves around the sun.”

We need to protect all speech because 1) we can’t always be sure which new ideas are better; 2) one group should not decide for other groups what they should be talking about; 3) sometimes the road to good ideas is paved with bad ones; 4) exposure to bad ideas helps us understand why good ideas are good; 5) censorship takes away human freedom and agency, and we want to live in a free society; and 6) censorship confers victim status on the censored, which is a tool of recruitment and power.

All that is why anti-censorship advocates say the cure for bad speech is more speech.

And that’s why we need to fix the legal status of social networks.

So are Facebook and Twitter common carriers or publishers?

The answer is yes.

The fix for the common-carrier/publisher conundrum is to acknowledge that social networks are both.

When you follow me on Twitter and read and comment on something I post, that’s just like a phone conversation. Nothing we say should be subject to censorship, any more than a telephone call or a conversation on a sidewalk should be. Nobody is calling for monitoring all phone calls and putting microphones everywhere so that telephone companies and sidewalk makers can be sued or prosecuted for enabling such conversations.

But when you follow me on Facebook (I’m not on Facebook; this is hypothetical) and Facebook’s algorithm chooses not to show my post in your News Feed, but instead shows something from someone you don’t follow because it’s viral and trending, then Facebook in that instance is acting as a “publisher,” choosing what you see and what you do not see.

The problem with objectionable speech on social media is not that people are saying bad things. It’s that people who never chose to hear bad things get overwhelmed by them because the companies make them go viral. Emotions drive clicks, eyeballs and advertising dollars.

Most of the “trending” links you see on the right side of Twitter’s web version go to political trolls who say crazy things on purpose to get attention, because in an attention economy, there’s no such thing as bad publicity. Twitter is choosing to prioritize that dreck, essentially driving the public square conversation around publicity stunts, trolling and nonsense.

To put it in a metaphor: people really do slow down on the highway to rubberneck at car accidents. Social media algorithms make sure to dangle car accidents in front of you all day because that’s what you slow down for. So our town square is overwhelmed by car accidents instead of constructive discourse.

Of course, media outlets can and should be able to report on slander, libel, hate speech and so on without being sued for committing those offenses. And Twitter or Facebook amplifying such content could be considered akin to a news organization quoting it. But reporting typically wraps a quote or paraphrase in context, and that context should include opposing views. When social sites amplify, they amplify the entire message without context. The effect is as if they were publishing it themselves, which is more akin to a newspaper running a story from a news agency like Reuters. They’re distributing the content itself, not mentioning it or reporting on it.

Why my proposal is unacceptable

It seems obvious, at least to me, that this should be the legal reality for social networks:

If a user says it to a follower, the social network has common carrier status in that instance.

If the social network chooses to pick it up and spread it, the social network has publisher status for that post.

If someone you follow reshares or retweets objectionable content, you’re free to block or unfollow that person, or to use keywords to block such posts preemptively.

In other words, the legal liability for social media posts should depend on who decided to show them to me. If it’s someone I chose to follow, the company shouldn’t be held liable. But if Facebook or Twitter chose to show a post to me, they’re acting as publishers exercising editorial judgment and should therefore be liable for it. That means they could be sued, prosecuted and so on.

This new legal framework would have two major effects:

Social networks would have to accept their role as editorial decision-makers and make careful decisions about what to amplify and send into the viral stratosphere, instead of letting their algorithms run wild no matter the effect. Social networks would make less money.

More highly objectionable speech would exist on social networks, but would not go viral. You couldn’t see it unless you chose to see it. Horrible people wouldn’t get booted off social networks for saying horrible things.

And these two effects are why my proposal will be unacceptable to most people. First, journalists, pundits and the public tend to reflexively defend the right of social media giants like Facebook to use secret algorithms that determine what everyone sees, knows and believes. People also defend these companies’ right to earn many billions of dollars in profit each quarter.

Second, far too many people will want objectionable speech censored on social networks, even if those conversations take place exclusively among consenting adults. Too many people don’t think beyond: “I don’t like it; they should ban it.”

Because we’re unwilling to prioritize a functioning democracy and a cohesive society over the profits of giant corporations, and because we are insufficiently committed to free speech when it comes to speech we disagree with, none of this will happen.

So we’ll remain stuck in the mud, with both objectionable content pushed at us by secret algorithms AND censorship by corporations.

The solution is simple. But I don’t think the public wants it.

What do you think?

Mike’s List of Brilliantly Bad Ideas

1. Cheap beer you can’t drink comes to the “metaverse,” which doesn’t exist

Miller Lite is hatching a publicity stunt: hosting a "virtual bar" in the "metaverse" during the Super Bowl on February 13. Never mind that the "metaverse" doesn't exist. The “event” is taking place on a website called Decentraland, which existed before Mark Zuckerberg tricked everyone into using the word "metaverse." Anyway, this “metaverse” is just another world-building website where you’ll get to move your avatar around a "bar" with other avatars.

2. Beijing Olympic restaurant drops food from the ceiling

One restaurant for the media at the Olympic venue in Beijing uses an elaborate robotic system to deliver food from the ceiling to eating spaces separated by Plexiglas dividers, minimizing face-to-face contact between humans. The food is prepared by robots, and the restaurant operates 24 hours a day.

3. Japanese 7-Elevens get holographic self-checkout registers

Six 7-Eleven stores in Japan are getting self-checkout, non-contact holographic cash registers tomorrow. The registers work more or less like any self-checkout grocery system. The difference is that the display and buttons hover in the air. Because covid.

4. This cheap anti-surveillance gadget fits on your keychain

Some people live full time in Airbnbs, moving from one to the next. Like Airbnb CEO Brian Chesky. Or me. One remote risk is that some creepy host or former guest might plant a hidden camera. Also: A growing crime in China is the planting of hidden cameras in hotel rooms, which stream live to paying perverts. Either way, a new keychain gadget called the DENSOR lets you detect hidden cameras. Just look through the lens and press the buttons, and any camera will light up red.

5. Finally: Batteries get screens!

Portable batteries are great. If the battery in your phone, camera, tablet or laptop dies, you can charge it with the portable battery. But one startup figures, hey, since you're charging that device anyway, you might as well give it another screen. The “PDGO portable charging bank with display” looks for a video stream coming through the cable you’re charging with. If it finds one, the device shows it on its 1080p screen. (This is especially cool with action cams like the GoPro, which have tiny screens but hold photos and videos you really want to look at while you're out and about.)

Mike’s List of Shameless Self-Promotions

Can Google be trusted?

It’s time to talk about time. (From my new “Future of Work” newsletter! Get it delivered free via email.)

How to get reactive cybersecurity right

How kefir transforms your diet

I think it's logical and reasonable from a liability standpoint as it relates to the social media entities. I'm not sure it will do much to curb the urge to silence and marginalize the people we disagree with. I suspect people will still follow the people they despise, report on the offensive things they say, and then proceed with the usual means of attack (employment, platform, and infrastructure).

The problem isn't necessarily "Free Speech"; it's more "Free Reach"...

For example: Trump, Dan Bongino, Alex Jones and Milo Yiannopoulos can all still speak publicly. But right now their audiences are very niche, particularly after they were booted from major social networking services. Their reach cratered after the services finally publicly recognized how toxic these people are (and caved to extensive public pressure).

Also, note that I had to Google the last three names just now to remember them for the purposes of this comment. I barely remember them today...