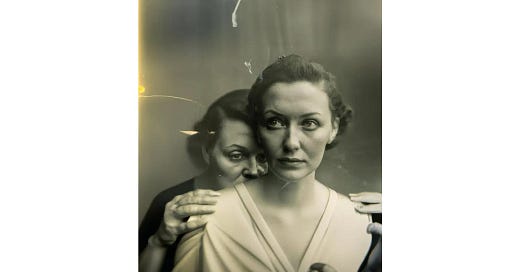

The deepfake singularity is here

AI-generated videos and photos have reached a level of quality at which even experts can't always tell what's real and what's fake.

Remember the deepfake panic of a few years ago? It started in early 2018, when a series of vaguely convincing videos emerged showing people saying things they never said. One famous demo featured actor and director Jordan Peele impersonating former President Barack Obama, with Peele's voice synced to a deepfake of the president. (The video was created using Adobe After Effects and the AI face-swapping tool FakeApp.)

The fear was that we would eventually reach a kind of deepfake singularity, in which prominent people, such as political leaders, could be shown saying damaging and incriminating things they never actually said, and nobody could tell or prove that the footage was fake.

Back then, I expressed a related fear of my own: that authentic, incriminating videos could be written off as deepfakes, enabling wrongdoers caught in the act to dismiss the evidence.

And, of course, deepfake pornography (typically celebrity or influencer faces superimposed on the bodies of porn actors) and AI-generated revenge porn have been a scourge for at least six years, and the problem keeps getting worse. The latest horror is the news that high school students are using the technology to victimize classmates. Even the crafts marketplace Etsy has been caught allowing deepfake porn.

Propaganda efforts around the Gaza conflict are driving the use of deepfakes and AI-generated images to unprecedented heights. The vast majority of people following news of the conflict can’t tell the difference, and don’t even question the authenticity of what they’re seeing.

I’m going to call it: We have now reached the feared deepfake singularity.

A new survey conducted by OnePoll and commissioned by De Beers Group found that 74% of Americans can’t tell what’s real and what’s fake online. Around half aren’t sure whether targeted ads and influencer content are real or AI-generated. And some 41% can’t tell whether the products they’re considering buying online are real or fake. (Worse still: more than half of respondents said they prefer the fake product if it’s cheaper.)

Deepfake videos have also reached the political arena. On Sunday, Pakistan’s former prime minister Imran Khan delivered a four-minute video speech to millions of supporters at an online rally. But Khan is in jail. His party used AI from ElevenLabs to recreate his voice (Khan reportedly wrote the script), and the video was captioned as AI-generated content.

Meanwhile, a Russian student recently surprised President Vladimir Putin with a deepfake that looked and sounded like Putin. The exchange was published on the social network formerly known as Twitter. Putin called it his first AI “twin,” but that’s not true. In June, hackers broadcast a deepfake of Putin on Russian TV and radio in which the fake Putin declared martial law, claiming that Ukraine had invaded Russia.

Witness Executive Director Sam Gregory said in a TED Talk last month that even experts using AI tools can’t always tell for sure whether the most sophisticated videos are real or fake. What chance do ordinary content consumers have? (Witness is a human rights organization that helps people use video and technology to protect and defend their rights; its work focuses on identifying deepfakes and developing technologies that can prove authentic videos are real.)

Mike’s List of Shameless Self-Promotions

The fear and hype around AI is overblown

It’s time to take your genAI skills to the next level

Read ELGAN.COM for more!

Mike’s Location: Silicon Valley, California

(Why Mike is always traveling.)