New AI trick: 'synthetic human memories'

AI-edited photos can alter memories of things you experienced in real life. This knowledge will be weaponized. Here comes the False Memory Industrial Complex.

We already know that AI-edited pictures, AI fakes, and deepfake videos can make large numbers of people believe false second-hand information. It's easy to convince people that the Pope rocks a big, white puffy jacket.

But in a disturbing new development, research from MIT and elsewhere has found that AI-edited photos can reliably induce false memories of events personally experienced.

The scientists called them "synthetic human memories."

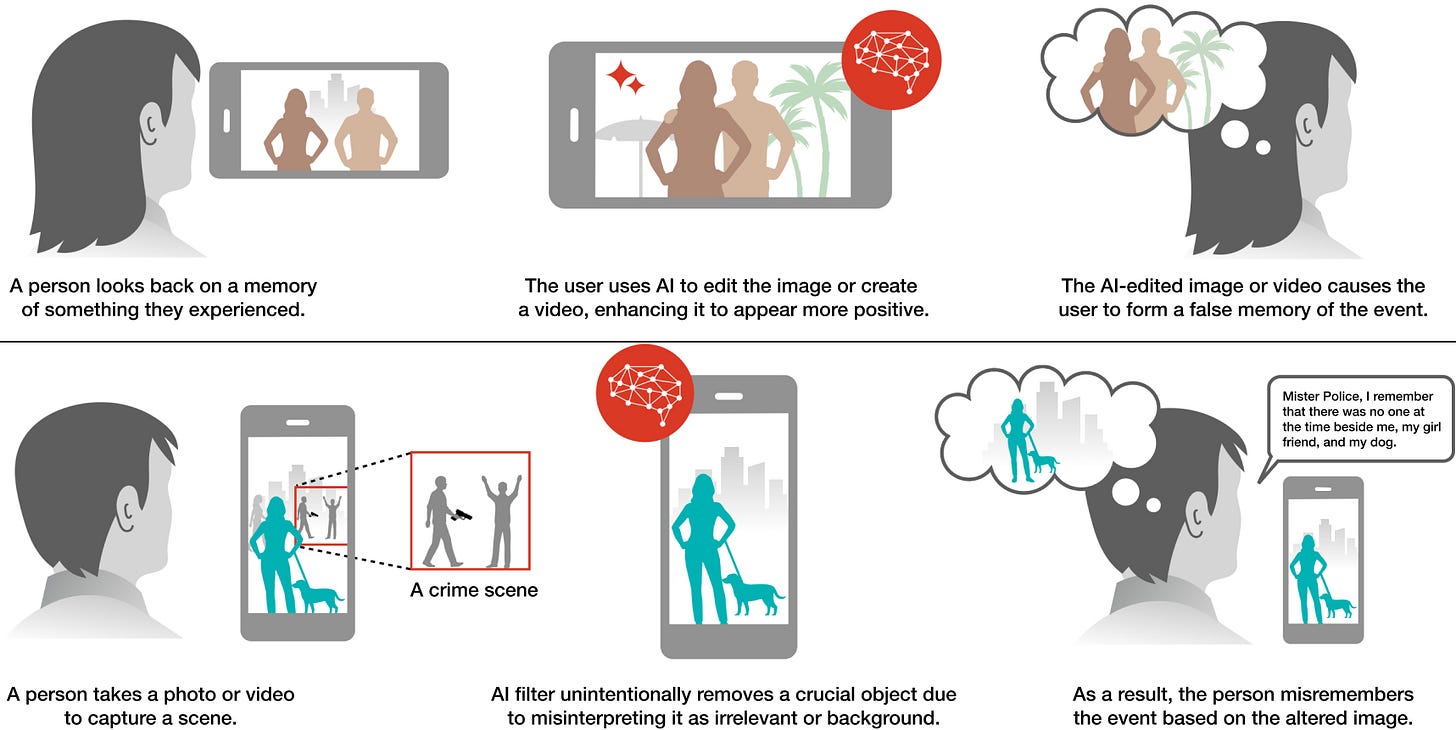

The research: 200 participants were shown unedited photographs. Then, they played Pac-Man for two minutes (to distract them from what they saw). Next, they were shown the same photographs, now edited with AI to change the facial features, race, ethnicity, body shape, or posture of the people in them, or to add or remove objects or tweak backgrounds, weather, buildings, or lighting.

They were then asked to remember and describe the first set of photos, not the second. Those shown AI-edited photos were far more likely to falsely remember the AI-made changes as part of the original, unaltered images, and many were certain that their false memories were true.

Interestingly, a subset of participants who were shown the photos in video form were even more likely to form false memories.

If I recall correctly, false memory syndrome is fairly common. Human memory is inexact, subject to "memory drift," and vulnerable to manipulation through suggestion. (Sometimes, aggressive, stressful police interrogation can convince innocent suspects that they're guilty of the crimes cops are badgering them about.)

Meanwhile, Meta this week announced plans to introduce AI-generated images into Facebook and Instagram feeds using Meta's Emu image generation model. They'll be labeled as AI-generated, but they will surely create false memories, especially on Facebook. (Facebook is where users often share photos of things that happened with family and friends.) Using AI, users will be able to purge people who have fallen out of favor from snapshots (Stalin-style), perhaps leading others to falsely remember that the person was never there.

The risk is that with AI, false memories can be crafted precisely and induced on a larger scale.

Let's say, for example, that a presidential candidate wanted to convince voters that he was strongly endorsed by, say, Taylor Swift. He could lie about that at a rally, show AI-generated images of Swift support, and then email all attendees AI-edited photos of Swift literally at the rally, arm-in-arm with the candidate. Six months later, many attendees might swear they saw Swift at the rally with their own eyes.

A truly large-scale disinformation application might look like this: dishonest candidates, despots, authoritarians, oligarchs, or reputation management consultants respond to damaging photos or videos almost reflexively by producing AI-edited versions of them and flooding the zone. The goal would be to make people believe the altered imagery was always the correct one, and that it was the picture they saw published in reputable publications: a deliberately engineered "Mandela Effect."

Never forget: Liars can now convince the public of false things they didn't personally experience — and also things they did.

Shameless Self-Promotion

What happens when everybody winds up wearing ‘AI body cams’?

What North Korea’s infiltration into American IT says about hiring

Apple embraced Meta’s vision (and Meta embraced Apple’s)

The rising threat of cyberattacks in the restaurant industry

More from Elgan Media!

My Location: Aït Benhaddou, Morocco

(Why Mike is always traveling.)

