New TikTok tech lets you become anyone

ByteDance, the company behind TikTok, just accelerated our descent into a world where everything, and everyone, is fake.

My mother told me I could be anyone I wanted to be when I grew up. Turns out she was right.

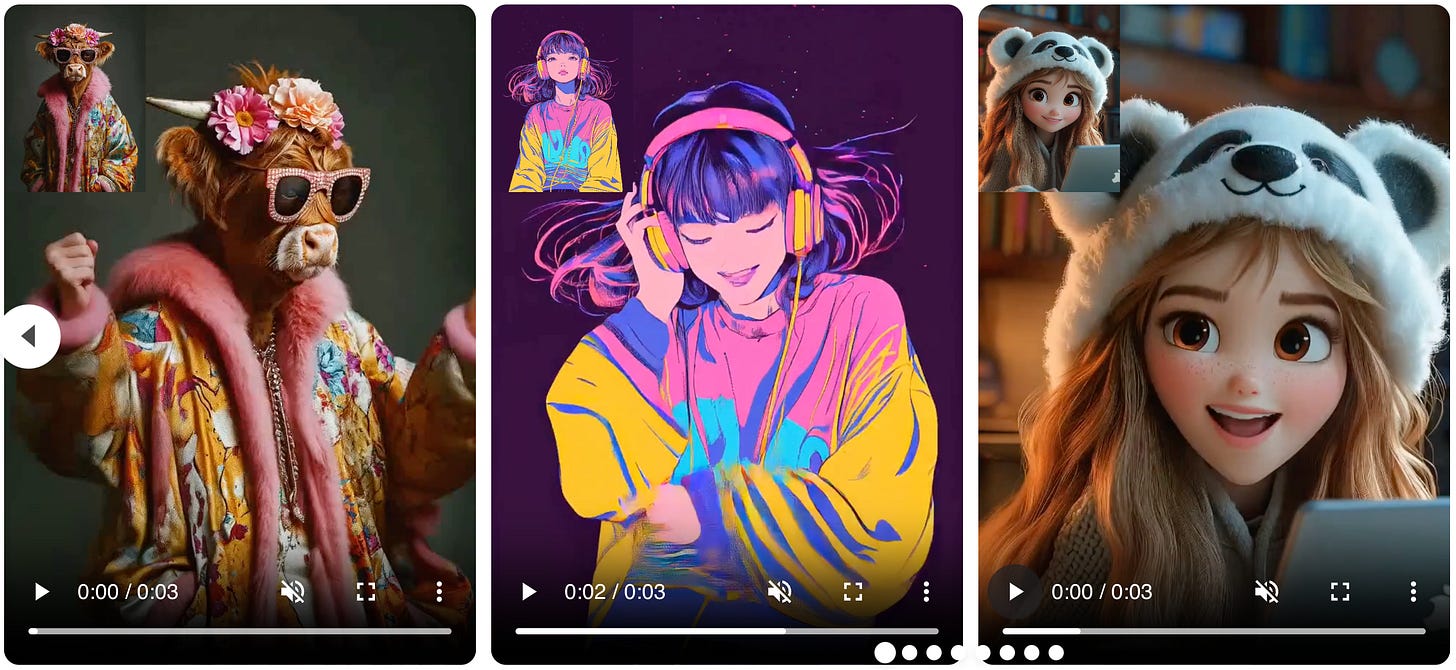

ByteDance (yes, the TikTok people) just unveiled an AI model called DreamActor-M1. It can take a single still image and turn it into a fully animated video with lifelike facial expressions, natural head movements, and even full-body motion.

Even now in its "beta" phase, or whatever, it's easy to use. Just upload a picture of a person or a cartoon character, then upload a video of someone talking. DreamActor-M1 will create a new video with the same voice, facial expressions, body language, and hand gestures, but performed by whoever is in the uploaded photo.


DreamActor-M1 uses a sophisticated AI architecture called a Diffusion Transformer (DiT). This framework enables the system to generate high-quality animations by combining data from the input image with movement cues extracted from a reference video or even an audio file. The result is an animation that feels authentic, for the most part.

Tech like this exists elsewhere. But what's different about DreamActor-M1 is its hybrid guidance system. Rather than one system that tries to animate everything, DreamActor-M1 has several specialist guidance systems: one for facial expressions, another for head orientation, and yet another for body movements.

The model was trained on diverse datasets that include images and videos of people in different poses and at different scales. It also uses what the researchers call "complementary appearance guidance" to keep longer video sequences coherent and to fill in details the source photo doesn't show. So if only the top of someone's shirt is visible, the AI can conjure up the whole shirt.

ByteDance says people could use DreamActor-M1 for movie-making, video gaming, and other applications. But obviously, it's designed to enable anyone on TikTok to become anyone on TikTok.

It's the natural extension of filters that bend and tweak the appearance of models and influencers, transforming their faces and bodies into younger, taller, more voluptuous fake people who get more likes or "hearts."

With DreamActor-M1, TikTok users will be able to steal any photo of any person from any source and make their own videos as that person. If another user wants to chat live, the impersonator can stay in character as that person throughout the live interaction.

This process can be industrialized. Imagine a company set up in China or elsewhere applying a single video to a thousand photos to create a thousand "influencers," all saying the exact same thing.

You can also imagine the frauds that could be unlocked with real-time "deepfake"-style video.

The widespread availability of technology like ByteDance's DreamActor-M1 will fundamentally reshape human interaction with visual media, collapsing the already fragile distinction between reality and simulation. By enabling hyperrealistic animation of any human image using simple inputs, this category of AI will democratize Hollywood-grade effects while weaponizing deception at scale.

Culturally, it will accelerate the meme-to-manipulation pipeline, where seemingly innocuous memes subtly introduce and normalize extreme or manipulative ideas.

Trust in the authenticity of videos will become contingent on verification. An "expert" will be needed to verify not only photos and audio, but also video. And people can easily reject such experts, just as many people now reject experts on health, science, and politics when those experts contradict their beliefs.

Innocent people victimized by fake video won't be able to prove they're innocent. Guilty people caught red-handed by authentic video will be able to credibly claim the video is fake.

Such AI will unleash a new world of microtargeting, where scammers, advertisers, political actors, and purveyors of malicious international propaganda, like the Russian government, will be able to reach each person with a different on-screen persona delivering a custom AI-generated message, all at very low cost.

Even before DreamActor-M1 is widely used, these problems are already growing at alarming rates. Deepfake detection startup RealityGuard claims a 900% increase in synthetic media since 2023, with 96% of it malicious.

The era when you could believe what film and video showed you, because the footage itself was evidence that something happened, is coming to an end.

And our Homo sapiens brains aren't ready for it.

Most people want their biases confirmed. They want to be desired by beautiful people online. They want to be beautiful people online. They want to witness the people they've been convinced to hate committing horrible acts. They want to witness the people they've been convinced to admire doing brave, honorable things. They want to see miracles and freaks and ghosts with their own eyes.

And technology like ByteDance's DreamActor-M1 will give 'em what they want.

More From Elgan Media, Inc.

Where’s Mike? Silicon Valley, California

(Why I’m always traveling.)