The AI robots are watching... and learning

Physical reality is good, virtual reality is better, for robots learning from humans by example.

Humans are cultural animals, which means that we learn from each other and pass that knowledge on to others. Acquiring skills — from folding laundry to cooking to boxing — involves a teacher explaining while demonstrating. We learn by observing others.

Thanks to generative AI, robots are also gaining the ability to learn the way humans do — by watching YouTube videos.

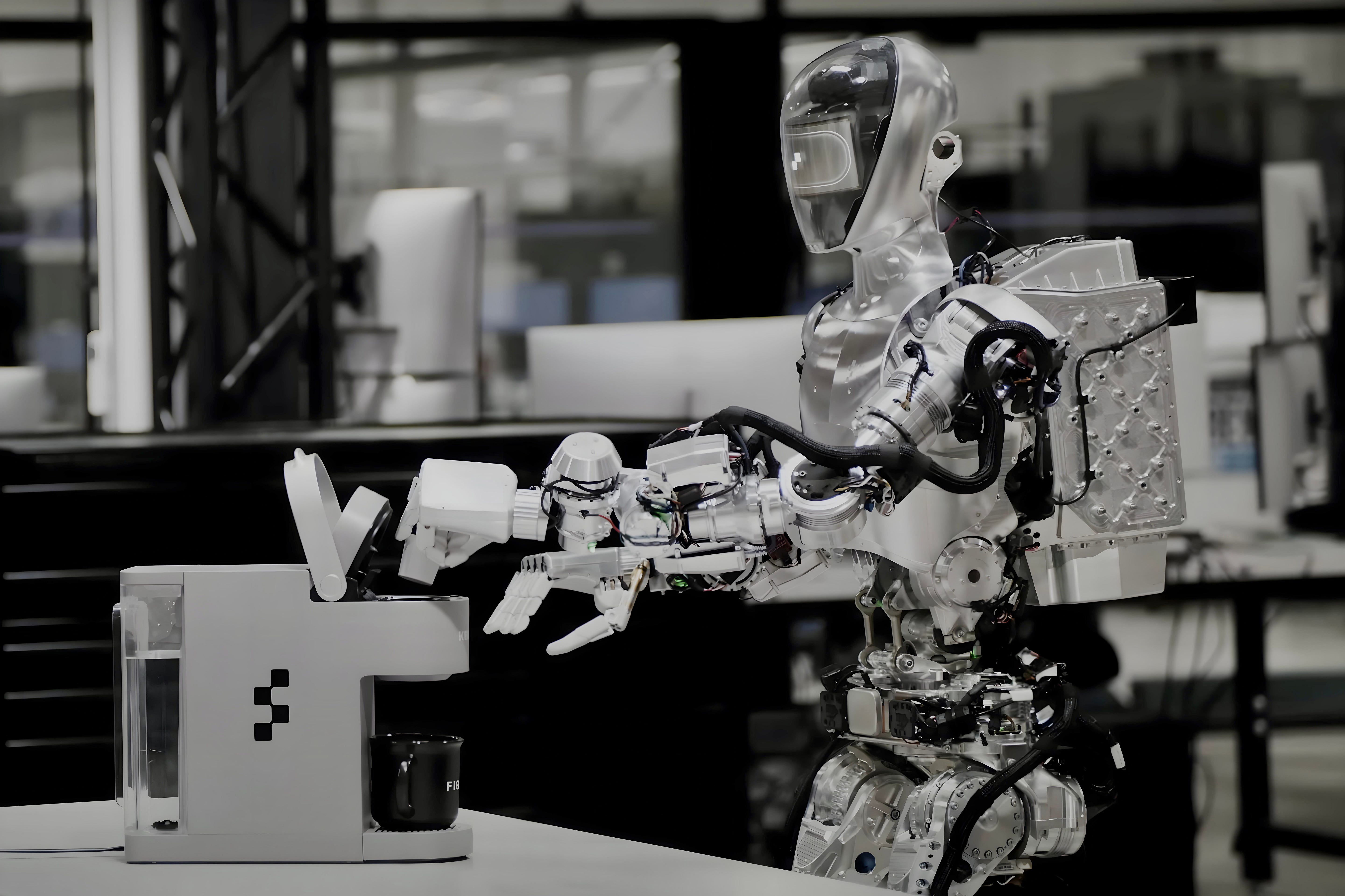

The robotics company Figure programmed humanoid robots to learn tasks by watching videos. In one demo, a robot was taught the extremely valuable skill of making coffee after watching videos for 10 hours.

Engineers at Carnegie Mellon University achieved something similar. They developed a method called the Vision-Robotics Bridge that enables robots to learn new skills by watching videos of humans performing tasks. In tests, the robots learned 12 new tasks in 200 hours of unsupervised binging. These include opening drawers, opening cans with a can opener, picking up a phone and others.

While using YouTube videos is nice, combining visual learning with virtual reality is even better.

Researchers at Google DeepMind created an AI system that can learn new skills just by watching a software know-it-all in a simulated 3D environment. Google researchers tasked the AI with passing through colored spheres in the correct order. They put an “expert” agent into the environment that knew how to do this task. Not only did the AI “robot” learn from the expert, but it first figured out that learning from the expert was the fastest way to learn.

Stanford's HumanPlus Project is also pioneering the training of Unitree's H1 humanoid robots by getting them to learn from people. The robots initially learn by watching people and from pre-recorded human motion data. To refine their skills, they then shadow human operators in real time, collecting task-specific data that allows them to learn and imitate human skills directly from “egocentric vision” (video captured from a bodycam on the robot). So far, the robot has learned how to put on shoes, stand up, walk, unload boxes from a rack, fold clothes and type, pretty much matching all my skills. The researchers put all their code on GitHub, and the robot is also available for sale. That means that for a little over $100,000, you can buy a robot that will learn by watching.

This early-days research into enabling robots to learn by example points to a future where robots can learn things in a wide range of efficient ways.

One way is to feed video of an assembly line worker into a digital twin “Physical AI” factory in Nvidia’s Omniverse environment, enabling the robot to imitate the human and, by trial and error, learn how to do that job. The software could then be transferred into a real physical robot that could do the same factory job.

Another mind-blowing concept could be a humanoid robot with AI approaching general intelligence, which could be simply turned loose on YouTube to learn all the skills demonstrated in a million videos on that platform, from baking bread to fixing the sink to putting up drywall. (Or, even more entertainingly, turn it loose on TikTok so it could learn to twerk and eat Tide Pods.)
