The mainstreaming of AI glasses starts today

Meta's super great AI glasses got two Super Bowl commercials. Here's what happens next.

Meta is advertising its Ray-Ban Meta smart glasses today with two 30-second Super Bowl commercials in the first and third quarters. Meta spent more than $7 million per ad.

The first ad, which features Chris Hemsworth, Chris Pratt, and Kris Jenner, is already on YouTube. In the ad, Chris Pratt uses the Meta AI feature to identify Maurizio Cattelan's "Comedian" artwork in Kris Jenner's home.

(Meta is also selling a limited edition Super Bowl version of the glasses, a matte black Wayfarer design with either gold reflective lenses or lenses reflecting the colors of the Super Bowl finalists.)

To me, the event represents the long-awaited mainstreaming of all-day, everyday AI access for what will eventually become a majority of the world's people.

It's also a big moment for Meta, which has ambitions for its facetop computer.

During the company's Q4 2024 earnings call, founder and CEO Mark Zuckerberg predicted that AI glasses can reach "hundreds of millions and eventually billions" of users. He said he finds it hard to imagine that, ten years from now, basically all eyeglasses and sunglasses won't connect the wearer to AI.

I hope that dozens of companies offer hundreds of styles of glasses. But for now, Ray-Ban Meta glasses are the most consumer-friendly.

I wear my Ray-Ban Meta glasses every day, and I've been trying out their two most advanced (and, in fact, experimental) features: Live AI and Live Translation.

Live AI is turned on with a voice command and stays on, during which time video is streamed to Meta servers through the glasses. You can ask questions about what both you and the AI are seeing and hearing, and the AI tells you. This feature isn't as powerful as Google's demo of a similar feature in the lab, which Google calls Project Astra.

Live AI is less powerful, less specific, and less capable with information than Astra, but it does have the charming feature of existing, whereas Astra is totally unavailable to the public.

Live Translation is amazing. I'm in Sicily, and Italian is one of the currently supported languages (the other two are Spanish and French). I can turn on live translation, and whenever the glasses pick up the Italian language, the words are translated into English and spoken for me (and only me) to hear.

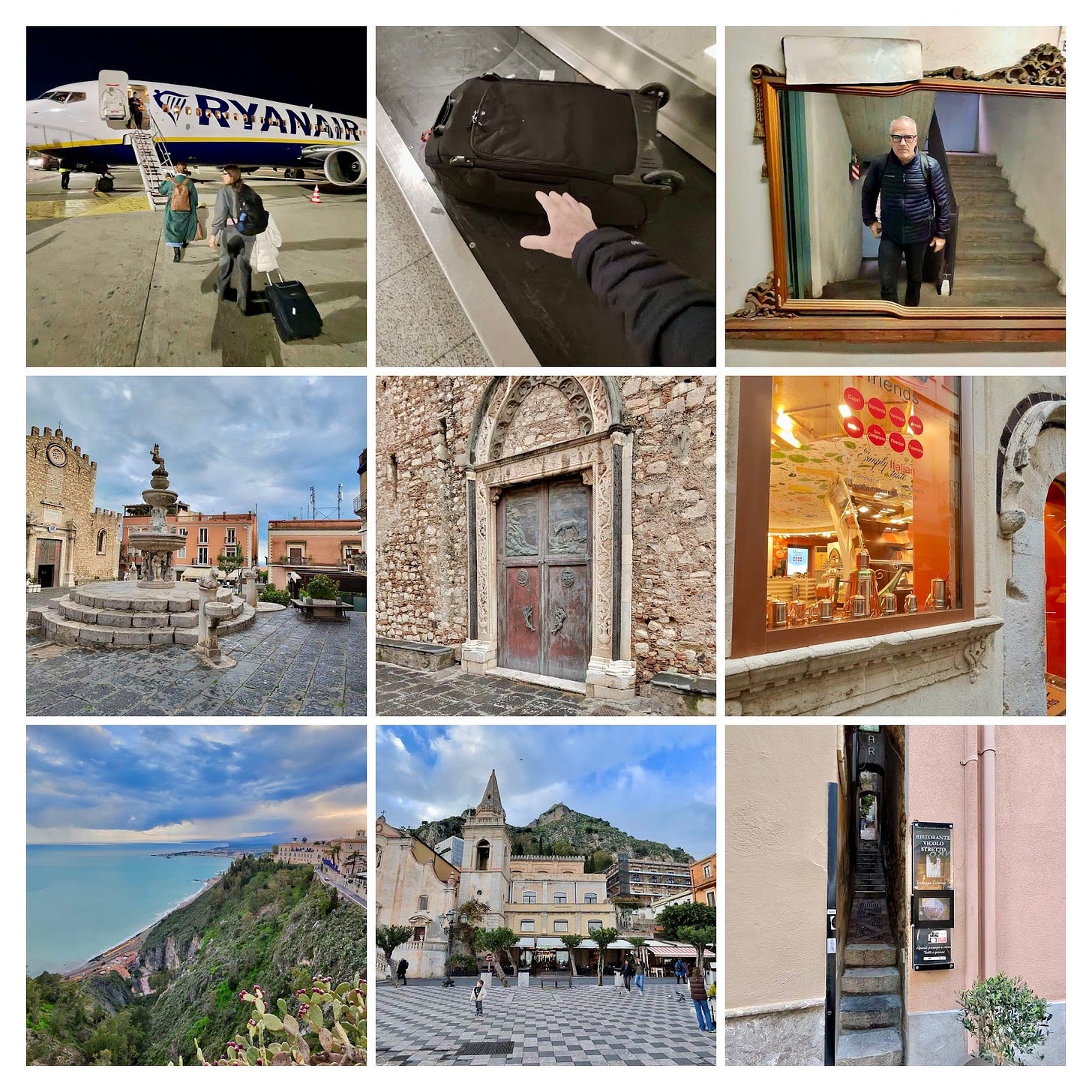

(All the photos in these two collages were taken by me in the last week via Ray-Ban Meta glasses in Sicily. I take so many pictures with these glasses, and it's so easy to take them, that the stream of photos becomes a lifelog.)

Beyond helping me understand my environment and Italian, Meta glasses read incoming messages to me while my phone stays in my pocket. I can ask all kinds of questions and get answers, hear turn-by-turn directions, listen to music and podcasts, take pictures and videos, and even live-stream to Instagram or Facebook, all hands-free, using glasses everyone around me thinks are just regular glasses.

And that's the magic: I hear without anything in my ears and use my phone without looking at it or even touching it.

I bought transition lenses with my prescription, so they work as both regular glasses and sunglasses.

In short, Ray-Ban Meta glasses are amazing, thrilling and powerful to wear, and just about anyone who tries AI glasses like these will never want to go without them.

But the real takeaway here is not that Ray-Ban Meta glasses are great. What matters is that the human race is about to be augmented with all-the-time AI access on a massive scale, representing the biggest change to human culture since the smartphone.

Introducing the Unicorn Roast Podcast!

Hey, friends. I recently launched a brand new podcast you’re really going to love. It’s called Unicorn Roast. I’m co-hosting it with fellow tech journalist Emily Forlini. The podcast is a fun and very real weekly conversation about technology, society, culture and politics, but mostly tech. The podcast is free and ad-free, and we’ve decided to make our home page on Substack. Go here to subscribe free now.

Emergent Technologies

Toyota has hired the San Francisco-based game engine company Unity to create the user interface for its car dashboards | The US military’s latest boat is fast and stealthy, and the crew is optional | Lab-grown chicken has reached its ultimate destination: as dog food | Researchers grow living teeth in a jar, using combined human and pig cells | UC Berkeley researchers have developed a novel AI-powered training method that enables robots to master complex tasks with 100% accuracy in less than two hours | A new study found that LLMs, including those by Meta and Alibaba, can self-replicate to avoid being shut down | AI sewer drones are in development to work autonomously in sewers without human input | Researchers invent hydraulic robot blood that acts as liquid batteries

More From Mike

Why I want glasses that are always listening

Apple is the latest company to get pwned by AI

Robots get their ‘ChatGPT moment’

Meta puts the ‘Dead Internet Theory’ into practice

Where’s Mike? Sicily!

(Why I’m always traveling.)

Love my Ray-Ban Metas. For cycling (hands-free photos and videos) and listening to music and podcasts, you cannot beat them. Pro tip: Turn off always listening and turn on tap to activate, and it saves lots of battery.

The quality of the pictures is impressive. I don't have a Facebook account. Could I still use these glasses?